AI assistants are now part of the B2B decision-making unit. They act as buyer enablement co-pilots, supporting buyers from discovery through to contract negotiation.

When ChatGPT, Perplexity or Google AI Overviews answer technical purchase questions, they reach past glossy top-of-funnel pages and often cite deep documentation and user-generated content instead. Vendors with clean, structured, easily crawlable product docs could win a disproportionate share of these citations. Teams focused on long-tail SEO have sometimes treated product and technical documentation as a marketing asset, with great impact. Building it into a large language model optimisation and generative engine optimisation strategy could now be table stakes.

The AI Search Shift

Picture this: your CTO opens ChatGPT and types “Which Kubernetes platform offers native GitOps, SOC 2 compliance and a Terraform provider?”. Within seconds the tool replies with a ranked shortlist, citations and integration caveats, all without a single Google click. Look at the footnotes or citations and you will see GitHub issues, vendor documentation and Stack Overflow threads outnumbering slick hero pages and keyword-stuffed blog posts.

AI search tools are rewriting the visibility rulebook. One asset they prize, and one particularly relevant to B2B software companies, could well be deep, technically rich documentation that answers highly contextual user queries.

How AI Assistants Cite Product Documentation

We know from our own client research at FirstMotion that LLMs often pick up technical product documentation and marketplace integration listings as citation sources.

Take, for example, some research we recently undertook into the Contract Lifecycle Management (CLM) software category.

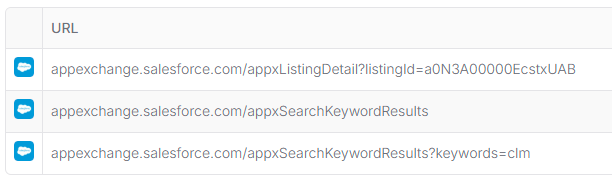

For an evaluation-stage prompt, “which CLM solution integrates best with Salesforce”, ChatGPT cited Salesforce’s own AppExchange as a source.

We then tried a similar prompt: “CLM software that integrates well with Microsoft 365 for in-house legal teams”.

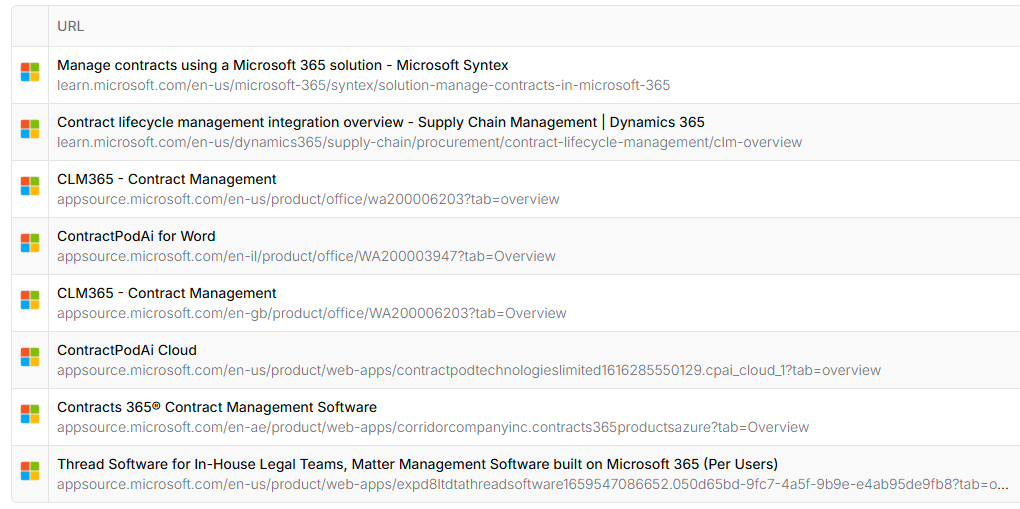

Here ChatGPT referenced Microsoft’s own ‘Learn’ documentation and ‘AppSource’ integrations marketplace as sources.

However, if you enter the same prompts as Google searches, these sources are much less visible on page 1 of the traditional results, if they appear at all.

The Rise of Deep Pages

So traditional Google search results still often favour blog content, whereas LLMs rely more heavily on deeper, technical documentation.

It seems that large language models slice across the entire URL tree, surfacing /docs/, /api/, /help/ and even changelog fragments when those paragraphs align with the user’s question. Google AI Overviews can perform similar deep linking, bypassing the homepage entirely. Shallow ‘menu level’ pillar pages, and sometimes even blog posts, simply do not contain the detail models need to answer certain user queries.
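One way to check this pattern against your own citation data is to bucket the URLs an assistant cites by their first path segment. The following is a minimal sketch, not a finished audit tool: the `example.com` URLs are hypothetical, and the list of "deep" prefixes is an assumption drawn from the sections named above.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed set of "deep" site sections, taken from the examples in the text.
DEEP_PREFIXES = ("docs", "api", "help", "changelog")


def classify_citation(url: str) -> str:
    """Return the site section a cited URL belongs to."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if not segments:
        return "homepage"
    first = segments[0].lower()
    return first if first in DEEP_PREFIXES else "other"


# Hypothetical citations copied from an assistant's answer.
citations = [
    "https://example.com/",
    "https://example.com/docs/integrations/salesforce",
    "https://example.com/api/v2/contracts",
    "https://example.com/blog/what-is-clm",
    "https://example.com/docs/security/soc2",
]

counts = Counter(classify_citation(url) for url in citations)
for section, total in counts.most_common():
    print(f"{section}: {total}")
```

Running the same tally on the page 1 Google results for the same query gives a rough side-by-side view of how deep each channel reaches into a site.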

Technical Documentation as an AI Search Visibility Cheat Code

Structured product docs hit the sweet spot between semantic density and crawlability. They are written for developers, packed with explicit feature labels, and sprinkled with code snippets that double as context tokens. That makes them perfect fodder for retrieval augmented generation.

Take a mapping platform such as Mapbox. When a late-stage buyer asks about tile rendering speed or GDPR compliance, the model can quote the exact paragraph of documentation that answers the question, and the vendor wins a place on the shortlist.

llms.txt – What, Why, How

llms.txt is a proposed standard: a Markdown file placed at the root of your site that serves as a guide for large language models (LLMs), helping them efficiently discover and prioritise high-value content. Think of it as the inverse of robots.txt: rather than telling crawlers what to avoid, it actively tells them what to focus on.

For B2B software companies, this could include API references, integration guides, pricing breakdowns, SLAs, and security documentation. These pages are often buried in subfolders or hidden behind obscure navigation paths, which means LLMs may miss them during general crawling. With llms.txt you create a clear index of evergreen, citation-worthy content that LLMs can parse and draw on. The practical benefits are twofold: it could increase the likelihood of being cited in AI-generated responses, and it gives you more control over the narrative touchpoints LLMs use when summarising your product.
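As a rough illustration, the sketch below assembles an llms.txt body in the layout the public llms.txt proposal describes: an H1 title, a blockquote summary, then H2 sections containing Markdown link lists. The vendor name, URLs and descriptions are hypothetical, and the `DocLink` and `build_llms_txt` names are ours, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class DocLink:
    title: str
    url: str
    note: str  # one-line description shown after the link


def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[DocLink]]) -> str:
    """Render an llms.txt body: H1 title, blockquote summary, H2 link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for link in links:
            lines.append(f"- [{link.title}]({link.url}): {link.note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical vendor and URLs, for illustration only.
sections = {
    "API reference": [
        DocLink("REST API", "https://example.com/docs/api",
                "Endpoints, authentication and rate limits"),
    ],
    "Integrations": [
        DocLink("Salesforce integration guide",
                "https://example.com/docs/integrations/salesforce",
                "Setup steps and supported objects"),
    ],
    "Security and compliance": [
        DocLink("Security overview", "https://example.com/docs/security",
                "Certifications, data residency and SLAs"),
    ],
}

llms_txt = build_llms_txt(
    "ExampleCLM",
    "Contract lifecycle management software for in-house legal teams.",
    sections,
)
print(llms_txt)
```

The output would be saved as /llms.txt at the site root. Whether and how a given AI crawler reads the file is up to that crawler, so treat it as a signal rather than a guarantee.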

Rethinking ‘noindex’

Some teams historically hid product or technical docs behind noindex for fear of SEO cannibalisation or of spreading Google’s crawl budget too thin. That reflex probably belongs to a pre-AI era. Today, keeping these pages out of reach may do more harm than good, because AI models can consume far more data and are better at judging what is relevant and what is not.
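A quick audit can show which documentation pages still carry the directive. The sketch below checks the two standard places a noindex can appear, a `<meta name="robots">` tag and an `X-Robots-Tag` response header. It works on HTML and headers you have already fetched; the function and class names are ours, and it deliberately ignores bot-specific variants such as `<meta name="googlebot">` or a header prefixed with a user agent.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if the page declares noindex via meta tag or X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)

    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            header_value = value.lower()
    header_directives = {part.strip() for part in header_value.split(",")}

    return "noindex" in parser.directives or "noindex" in header_directives


# Hypothetical docs page that is still hidden from indexing.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindexed(page, {}))
```

Pages flagged this way are candidates for review, not automatic removal of the tag: some, such as staging copies or deprecated versions, may be hidden for good reason.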

Conclusion – The Existential Risk of Staying Invisible

B2B buying is converging around conversational search, and AI tools are now buyer enablers in AI-native buyer journeys. If a model cannot cite you, you are missing an opportunity.